Hyperrealistic AI video isn’t science fiction anymore. OmniHuman-1, the hypothetical (but technologically plausible) engine that can conjure convincing digital humans from a few lines of text, shows just how thin the line between synthetic and authentic has become. This article unpacks the technology’s ethical implications, surveys its societal impact, and offers concrete guidance for creators, regulators, educators and everyday citizens who now share a media reality where “seeing” is no longer “believing.”

The Rise of OmniHuman-1 and Hyperrealistic AI

OmniHuman-1 builds on 2025-era breakthroughs—transformer-based diffusion models, real-time motion synthesis, RLHF fine-tuning and multimodal pipelines that stitch image, audio and 3-D animation into seamless video. Compared with earlier deepfake tools, OmniHuman-1 is:

- Faster – minutes instead of hours.

- Frictionless – minimal input photos or even text prompts.

- Truly lifelike – micro-expressions, skin translucency, natural cadence.

Potential upsides span education, accessibility and entertainment, but the same fidelity unlocks unprecedented deception.

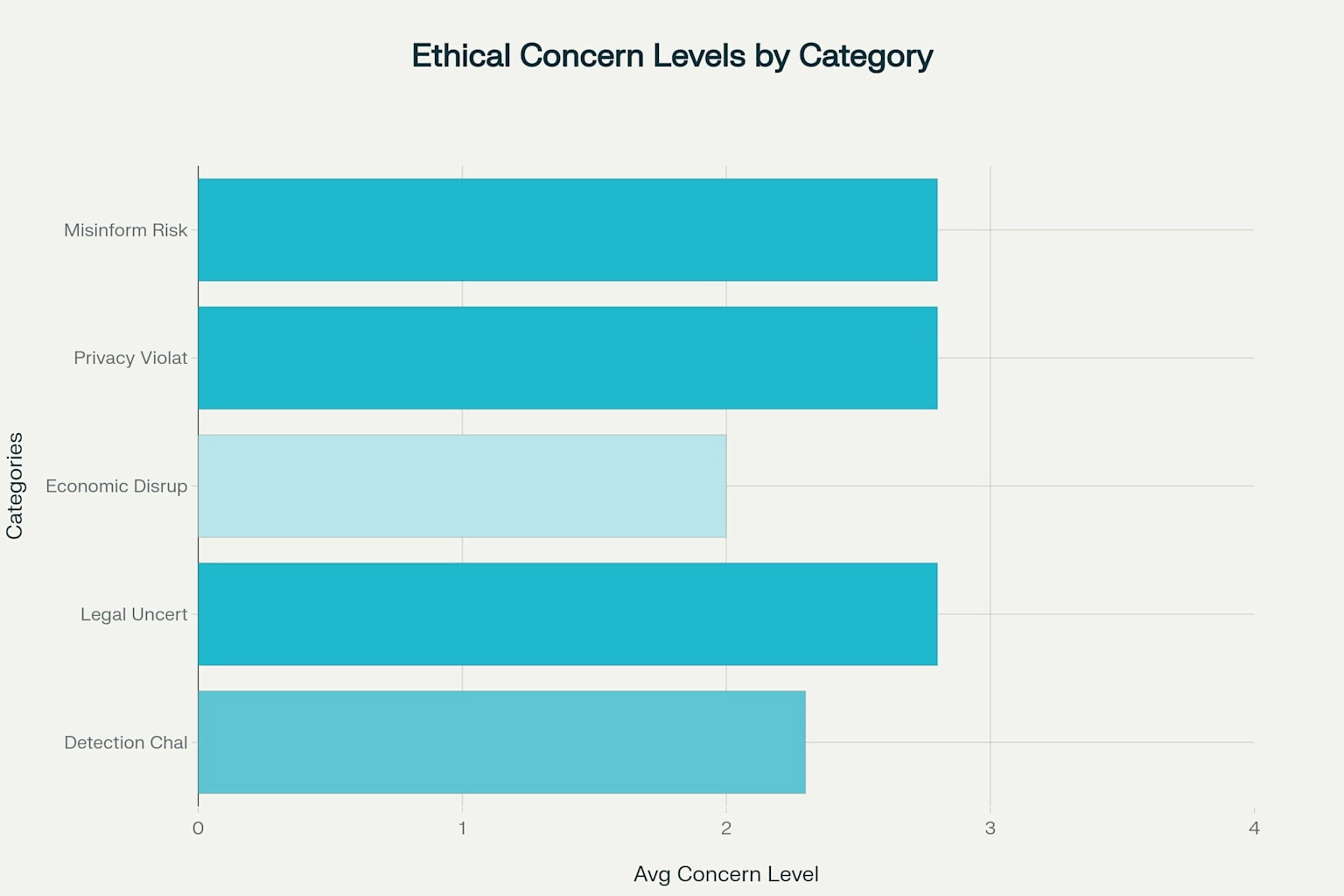

Core Ethical Dilemmas of OmniHuman-1

Misinformation & the “Weaponization of Reality”

- Fabricated political speeches, “eyewitness” war footage and cloned news anchors accelerate disinformation at industrial scale.

- The liar’s dividend lets real wrongdoers dismiss genuine evidence as fake, eroding shared truth.

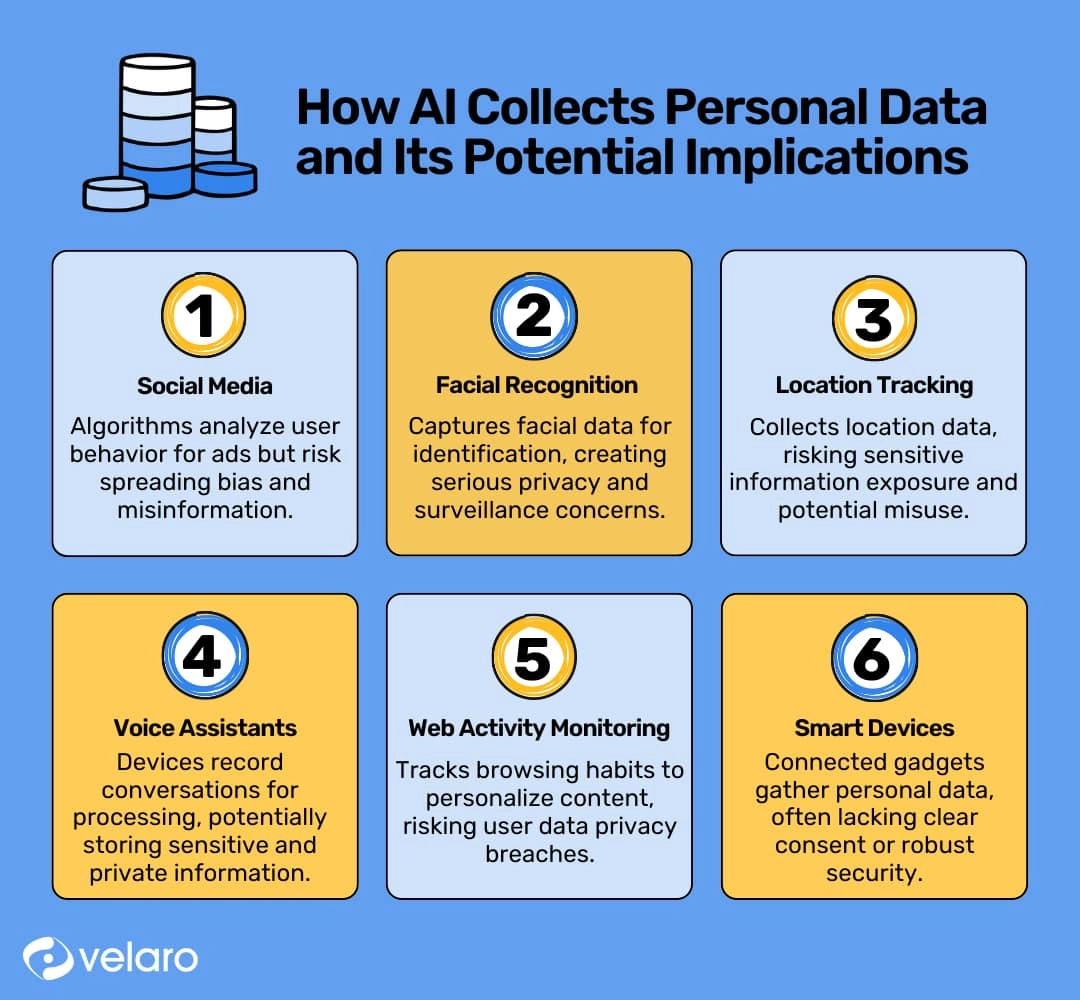

Privacy, Consent and Human-Likeness Rights

- Anyone’s public selfies can seed a convincing avatar—no permission needed.

- Non-consensual deepfake pornography already accounts for roughly 98% of deepfake videos by one widely cited industry estimate; OmniHuman-1 would amplify the harm.

- Biometric data becomes a tradeable commodity, challenging the very idea of personal autonomy.

Accountability Gaps

Who is liable when a synthetic CEO orders a fraudulent wire transfer? Developer, user or platform? Existing doctrines (defamation, right of publicity, copyright) only partially cover these scenarios.

Societal and Psychological Ripple Effects

Trust Deficit & Democratic Risk

Elections become contests of narrative authenticity. Surveys already show public confidence in media at or near record lows; omnipresent deepfakes could tip healthy skepticism into blanket cynicism.

Individual Harm & Mental Health

Victims of synthetic defamation or deepfake porn report trauma, social withdrawal and career damage. Content moderators face vicarious stress from assessing ever more realistic fakes.

Shifts in Human Relationships

Hyper-responsive AI avatars may meet emotional needs without the messiness of real people, risking “empathy atrophy” and altered expectations of intimacy.

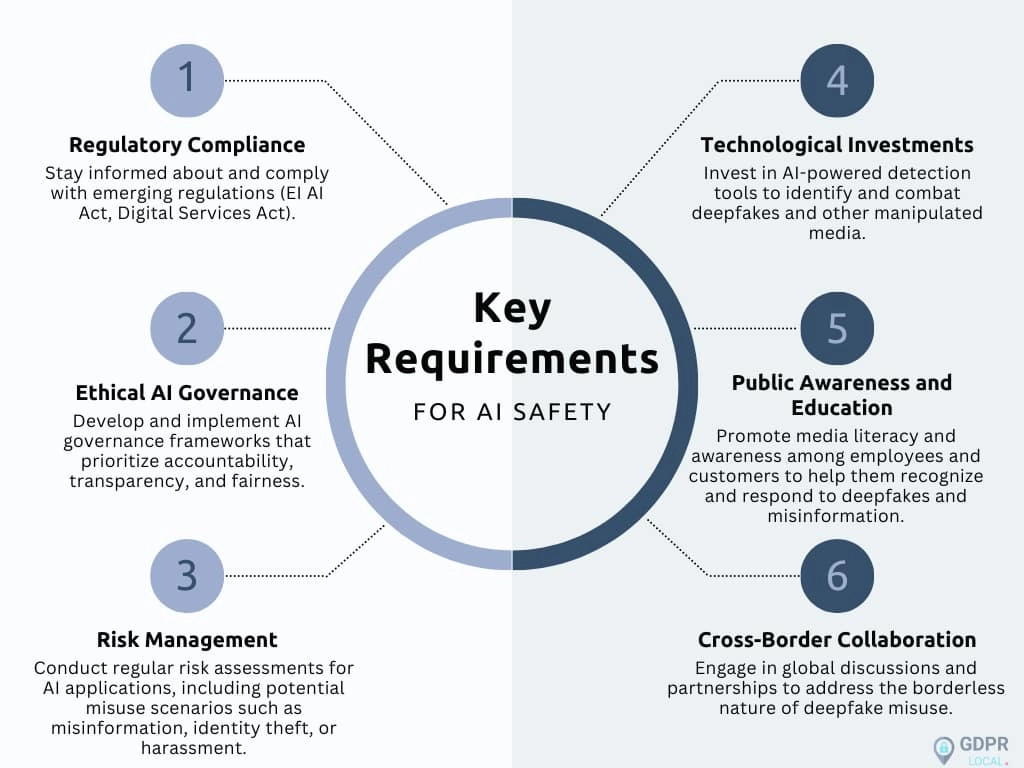

The Legal and Regulatory Landscape in 2025

- United States – Patchwork state laws (e.g., California AB 602 on sexually explicit deepfakes) and proposed federal bills (e.g., the NO FAKES Act on unauthorized digital replicas) target election interference, intimate-image abuse and likeness misuse but leave enforcement gaps.

- European Union – The EU AI Act, in force since August 2024 with obligations phasing in over the following years, requires disclosure of deepfakes and machine-readable marking of AI-generated media, and imposes risk-tiered obligations on providers.

- China – “Deep Synthesis” regulations require provenance watermarks, user ID checks and algorithm filings.

- Cross-border enforcement remains difficult; bad actors route data through jurisdictions with laxer rules.

Technical and Educational Mitigations

Detection & Provenance

- AI-based detectors (CNNs, vision transformers) spot generation artifacts, but their accuracy on in-the-wild content, unlike the curated sets they were trained on, can fall toward coin-flip rates.

- Content provenance standards like C2PA embed cryptographic metadata; watermarks such as Google’s SynthID mark AI outputs—though both can be stripped or spoofed.
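To make the provenance idea concrete, here is a minimal sketch of how a signed claim can be bound to a media file and checked later. It is not the C2PA format: real provenance systems use certificate-based public-key signatures and a standardized container, whereas this example substitutes a shared-key HMAC for brevity. The names `DEMO_KEY`, `make_manifest` and `check_provenance` are hypothetical and exist only for illustration.

```python
import hashlib
import hmac
import json
from typing import Optional

# Hypothetical shared secret. Assumption for illustration only: real
# provenance systems sign with certificate-backed private keys instead.
DEMO_KEY = b"demo-signing-key"


def make_manifest(media: bytes, generator: str, key: bytes = DEMO_KEY) -> dict:
    """Bind a claim ("made by <generator>") to the exact media bytes."""
    claim = {
        "generator": generator,
        "ai_generated": True,
        "sha256": hashlib.sha256(media).hexdigest(),
    }
    payload = json.dumps(claim, sort_keys=True).encode("utf-8")
    signature = hmac.new(key, payload, hashlib.sha256).hexdigest()
    return {"claim": claim, "signature": signature}


def check_provenance(media: bytes, manifest: Optional[dict],
                     key: bytes = DEMO_KEY) -> str:
    """Return 'verified', 'tampered', or 'unknown' (no manifest present)."""
    if manifest is None:
        # A stripped manifest proves nothing either way.
        return "unknown"
    payload = json.dumps(manifest["claim"], sort_keys=True).encode("utf-8")
    expected = hmac.new(key, payload, hashlib.sha256).hexdigest()
    if not hmac.compare_digest(expected, manifest["signature"]):
        return "tampered"  # the claim was edited after signing
    if manifest["claim"]["sha256"] != hashlib.sha256(media).hexdigest():
        return "tampered"  # the media no longer matches the signed hash
    return "verified"
```

Note the third outcome: when the metadata has been stripped, the check can only answer "unknown". That is why provenance works as a signal of authenticity for content that carries it, rather than as a detector for content that does not.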

Media & Digital Literacy

- Integrate “deepfake hygiene” into school curricula: verify source, reverse-image search, triangulate with trusted outlets.

- Public campaigns akin to anti-phishing training can boost baseline skepticism without descending into nihilism.

Stakeholder-Specific Guidance

For Developers & Platforms

- Adopt ethics-by-design: consent validation, bias audits, watermarking on by default, rate-limits on sensitive face swaps.

- Conduct red-team assessments before release; publish transparency reports.
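As a rough illustration of what ethics-by-design can look like in practice, the sketch below gates generation requests on three of the safeguards listed above: consent validation, watermarking on by default, and rate limits on requests that use a real person's face. Everything here is an assumption made for the example, including the `GenerationRequest` fields, the `PolicyGate` class and the thresholds; a production system would verify consent against an actual record rather than merely checking that a token is present.

```python
import time
from collections import defaultdict, deque
from dataclasses import dataclass
from typing import Optional, Tuple


@dataclass
class GenerationRequest:
    user_id: str
    uses_real_face: bool           # request targets a real person's likeness
    consent_token: Optional[str]   # hypothetical proof of the subject's consent
    watermark: bool = True         # watermarking is on unless explicitly disabled


class PolicyGate:
    """Sliding-window limiter plus consent and watermark checks (illustrative)."""

    def __init__(self, max_face_requests: int = 3, window_seconds: float = 3600.0):
        self.max_face_requests = max_face_requests
        self.window_seconds = window_seconds
        # user_id -> timestamps of previously allowed real-face requests
        self._history = defaultdict(deque)

    def check(self, req: GenerationRequest,
              now: Optional[float] = None) -> Tuple[bool, str]:
        now = time.monotonic() if now is None else now
        if not req.uses_real_face:
            return True, "ok"
        if not req.consent_token:
            return False, "missing consent"
        if not req.watermark:
            return False, "watermark required for real-face output"
        recent = self._history[req.user_id]
        # Drop timestamps that have aged out of the window.
        while recent and now - recent[0] >= self.window_seconds:
            recent.popleft()
        if len(recent) >= self.max_face_requests:
            return False, "rate limit exceeded"
        recent.append(now)
        return True, "ok"
```

Returning a reason alongside each decision is a deliberate choice: denied requests can be logged and aggregated, which feeds directly into the transparency reports recommended above.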

For Policymakers

- Close the “commitment-regulation gap” with clear liability rules and safe-harbor incentives for compliant platforms.

- Support R&D in robust detection and authenticity infrastructure; fund interdisciplinary watchdog bodies.

For Educators & Journalists

- Teach source-triangulation and AI literacy; require disclosure when synthetic media is used.

- Establish editorial standards: no synthetic imagery without context labels; archive original assets for verification.

For Citizens

- Protect your biometric trail—restrict public posting of high-resolution face shots.

- When in doubt, treat viral sensational clips as unverified until proven real.

Looking Ahead—Building a Trust Infrastructure

OmniHuman-1 forces a societal choice: double down on authenticity systems or drift into epistemic free-fall. Transparent AI labels, adaptive governance, international cooperation and a culture of verification form the scaffolding for trust in a synthetic age.

Conclusion

OmniHuman-1 exemplifies both the promise and peril of hyperrealistic AI. Its capacity to entertain, educate and empower is matched by its potential to mislead, exploit and destabilize. By marrying proactive technical safeguards, robust legal frameworks and a new social contract of digital authenticity, we can harness OmniHuman-1’s creative power while defending the foundations of truth and human dignity. The challenge is urgent—but with informed, collective action it is still solvable.

Frequently Asked Questions (FAQ)

QUESTION: How is OmniHuman-1 different from older deepfake software?

ANSWER: Traditional deepfake tools splice a target face onto existing footage; OmniHuman-1 generates a full-body, photorealistic human from scratch, animates it in real time, and syncs voice, gestures and lighting—making detection dramatically harder.

QUESTION: Can I legally stop someone from making an OmniHuman-1 video of me?

ANSWER: In many jurisdictions you can pursue claims under right-of-publicity, privacy or defamation laws, but protections vary. Some U.S. states require consent for commercial use of your likeness, and the EU AI Act requires that deepfakes be disclosed as such, yet enforcement across borders remains inconsistent.

QUESTION: Will watermarking solve the deepfake problem?

ANSWER: Watermarking (e.g., SynthID) and provenance metadata (e.g., C2PA) are vital for establishing where content came from, but determined bad actors can remove or mask them. They work best as part of a layered defense that includes robust detection, platform moderation and public literacy.

QUESTION: What psychological effects could arise from widespread AI avatars?

ANSWER: Victims of non-consensual fakes face trauma and reputational harm. More broadly, pervasive synthetic personas may erode empathy, create unrealistic relationship expectations and fuel general distrust of visual evidence.

QUESTION: What immediate steps can I take to spot a hyperrealistic AI video?

ANSWER: Pause and check contextual clues before sharing. Verify the source account; look for abrupt lighting shifts, unnatural eye reflections, lip-sync mismatches and inconsistent shadows; and corroborate the event with reputable outlets.